Pipeline Code#

# fake data

from markdown import markdown

from sklearn.datasets import make_classification

X, y = make_classification(

n_samples = 1_000,

n_classes=2,

random_state=0

)

import numpy as np

from typing import Mapping, List, Any, Optional

from dataclasses import dataclass

from functools import cached_property

# models

from sklearn.linear_model import LogisticRegression

from sklearn.neighbors import KNeighborsClassifier

from sklearn.svm import SVC

from sklearn.ensemble import RandomForestClassifier

from sklearn.naive_bayes import BernoulliNB

# from xgboost import XGBClassifier

# pipeline

from sklearn.base import BaseEstimator

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.model_selection import GridSearchCV

class DummyEstimator(BaseEstimator):

def fit(self): pass

def score(self): pass

@dataclass

class Pipe:

model: str = "LogReg"

def __post_init__(self):

self.scoring = [

'accuracy', 'precision_weighted', 'recall_weighted', 'f1_weighted', 'roc_auc'

]

@property

def pipe(self):

return Pipeline(

[

('scaler', StandardScaler()),

('clf', DummyEstimator())

]

)

@cached_property

def search_space(self):

return [

{

'clf': [LogisticRegression()],

'clf__penalty': ['l1','l2'],

'clf__C': np.logspace(0, 4, 10)

},

{

'clf': [SVC()],

'clf__C': np.logspace(0, 4, 10)

},

{

'clf': [KNeighborsClassifier()],

'clf__n_neighbors': [5,10,15],

},

{

'clf': [RandomForestClassifier()],

'clf__max_depth':[int(x) for x in np.linspace(10, 110, num = 11)],

'clf__min_samples_split':[2, 5, 10, 20],

'clf__bootstrap':[True,False]

},

{

'clf': [BernoulliNB()]

}

]

@cached_property

def search(self):

return GridSearchCV(

estimator=self.pipe,

param_grid=self.search_space,

cv=5,

n_jobs=10,

scoring='accuracy',

refit="roc_auc",

)

def fit(self, X, y):

return self.search.fit(X, y)

pipe = Pipe()

pipe.fit(X,y)

/home/chansoo/projects/statsbook/.venv/lib/python3.8/site-packages/sklearn/model_selection/_validation.py:378: FitFailedWarning:

50 fits failed out of a total of 610.

The score on these train-test partitions for these parameters will be set to nan.

If these failures are not expected, you can try to debug them by setting error_score='raise'.

Below are more details about the failures:

--------------------------------------------------------------------------------

50 fits failed with the following error:

Traceback (most recent call last):

File "/home/chansoo/projects/statsbook/.venv/lib/python3.8/site-packages/sklearn/model_selection/_validation.py", line 686, in _fit_and_score

estimator.fit(X_train, y_train, **fit_params)

File "/home/chansoo/projects/statsbook/.venv/lib/python3.8/site-packages/sklearn/pipeline.py", line 405, in fit

self._final_estimator.fit(Xt, y, **fit_params_last_step)

File "/home/chansoo/projects/statsbook/.venv/lib/python3.8/site-packages/sklearn/linear_model/_logistic.py", line 1162, in fit

solver = _check_solver(self.solver, self.penalty, self.dual)

File "/home/chansoo/projects/statsbook/.venv/lib/python3.8/site-packages/sklearn/linear_model/_logistic.py", line 54, in _check_solver

raise ValueError(

ValueError: Solver lbfgs supports only 'l2' or 'none' penalties, got l1 penalty.

warnings.warn(some_fits_failed_message, FitFailedWarning)

/home/chansoo/projects/statsbook/.venv/lib/python3.8/site-packages/sklearn/model_selection/_search.py:952: UserWarning: One or more of the test scores are non-finite: [ nan 0.94 nan 0.94 nan 0.94 nan 0.941 nan 0.941 nan 0.941

nan 0.941 nan 0.941 nan 0.941 nan 0.941 0.949 0.949 0.946 0.939

0.939 0.939 0.939 0.939 0.939 0.939 0.902 0.923 0.923 0.959 0.955 0.957

0.955 0.959 0.96 0.958 0.957 0.961 0.954 0.963 0.953 0.958 0.96 0.957

0.955 0.959 0.954 0.957 0.957 0.963 0.958 0.958 0.957 0.959 0.957 0.957

0.953 0.957 0.96 0.958 0.955 0.958 0.959 0.956 0.955 0.962 0.956 0.956

0.957 0.96 0.955 0.958 0.957 0.961 0.961 0.957 0.957 0.957 0.957 0.957

0.957 0.959 0.96 0.959 0.958 0.96 0.956 0.958 0.956 0.959 0.96 0.957

0.957 0.96 0.959 0.961 0.959 0.957 0.957 0.96 0.96 0.959 0.958 0.958

0.959 0.958 0.957 0.959 0.957 0.96 0.961 0.959 0.96 0.958 0.957 0.96

0.961 0.943]

warnings.warn(

GridSearchCV(cv=5,

estimator=Pipeline(steps=[('scaler', StandardScaler()),

('clf', DummyEstimator())]),

n_jobs=10,

param_grid=[{'clf': [LogisticRegression()],

'clf__C': array([1.00000000e+00, 2.78255940e+00, 7.74263683e+00, 2.15443469e+01,

5.99484250e+01, 1.66810054e+02, 4.64158883e+02, 1.29154967e+03,

3.59381366e+03, 1.00000000e+04]),

'clf__penalty': ['l1', 'l2']},

{'c...

5.99484250e+01, 1.66810054e+02, 4.64158883e+02, 1.29154967e+03,

3.59381366e+03, 1.00000000e+04])},

{'clf': [KNeighborsClassifier()],

'clf__n_neighbors': [5, 10, 15]},

{'clf': [RandomForestClassifier(max_depth=30,

min_samples_split=10)],

'clf__bootstrap': [True, False],

'clf__max_depth': [10, 20, 30, 40, 50, 60, 70, 80, 90,

100, 110],

'clf__min_samples_split': [2, 5, 10, 20]},

{'clf': [BernoulliNB()]}],

refit='roc_auc', scoring='accuracy')In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

GridSearchCV(cv=5,

estimator=Pipeline(steps=[('scaler', StandardScaler()),

('clf', DummyEstimator())]),

n_jobs=10,

param_grid=[{'clf': [LogisticRegression()],

'clf__C': array([1.00000000e+00, 2.78255940e+00, 7.74263683e+00, 2.15443469e+01,

5.99484250e+01, 1.66810054e+02, 4.64158883e+02, 1.29154967e+03,

3.59381366e+03, 1.00000000e+04]),

'clf__penalty': ['l1', 'l2']},

{'c...

5.99484250e+01, 1.66810054e+02, 4.64158883e+02, 1.29154967e+03,

3.59381366e+03, 1.00000000e+04])},

{'clf': [KNeighborsClassifier()],

'clf__n_neighbors': [5, 10, 15]},

{'clf': [RandomForestClassifier(max_depth=30,

min_samples_split=10)],

'clf__bootstrap': [True, False],

'clf__max_depth': [10, 20, 30, 40, 50, 60, 70, 80, 90,

100, 110],

'clf__min_samples_split': [2, 5, 10, 20]},

{'clf': [BernoulliNB()]}],

refit='roc_auc', scoring='accuracy')Pipeline(steps=[('scaler', StandardScaler()), ('clf', DummyEstimator())])StandardScaler()

DummyEstimator()

pipe.search.best_params_

{'clf': RandomForestClassifier(max_depth=30, min_samples_split=10),

'clf__bootstrap': True,

'clf__max_depth': 30,

'clf__min_samples_split': 10}

pipe.search.best_score_

0.9629999999999999

pipe.search.param_grid

[{'clf': [LogisticRegression()],

'clf__penalty': ['l1', 'l2'],

'clf__C': array([1.00000000e+00, 2.78255940e+00, 7.74263683e+00, 2.15443469e+01,

5.99484250e+01, 1.66810054e+02, 4.64158883e+02, 1.29154967e+03,

3.59381366e+03, 1.00000000e+04])},

{'clf': [SVC()],

'clf__C': array([1.00000000e+00, 2.78255940e+00, 7.74263683e+00, 2.15443469e+01,

5.99484250e+01, 1.66810054e+02, 4.64158883e+02, 1.29154967e+03,

3.59381366e+03, 1.00000000e+04])},

{'clf': [KNeighborsClassifier()], 'clf__n_neighbors': [5, 10, 15]},

{'clf': [RandomForestClassifier(max_depth=30, min_samples_split=10)],

'clf__max_depth': [10, 20, 30, 40, 50, 60, 70, 80, 90, 100, 110],

'clf__min_samples_split': [2, 5, 10, 20],

'clf__bootstrap': [True, False]},

{'clf': [BernoulliNB()]}]

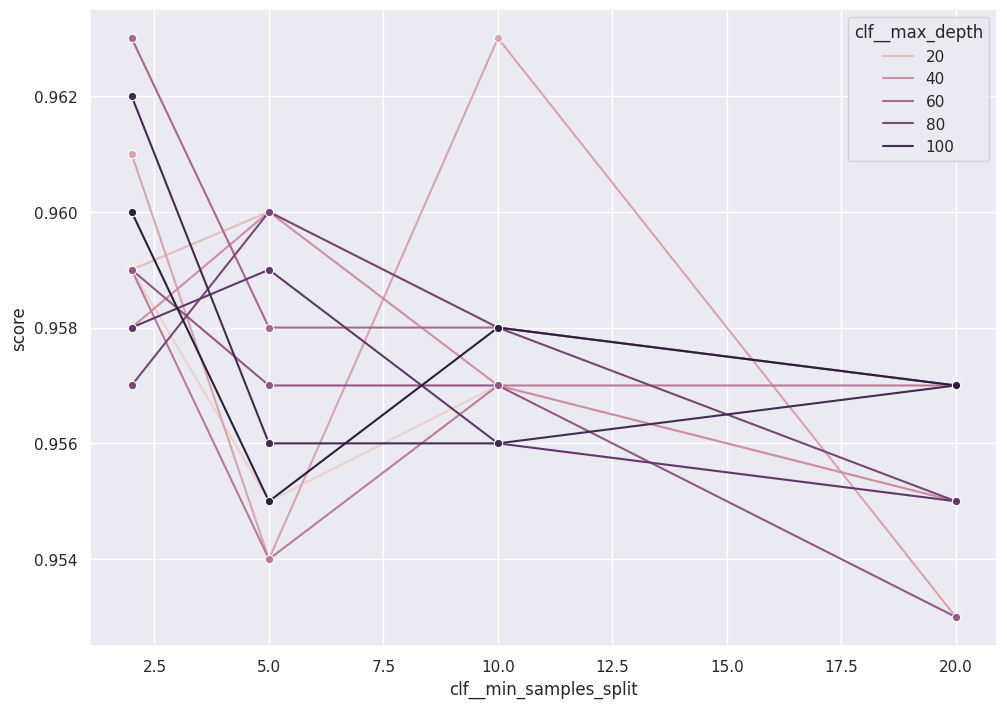

See Results:#

import pandas as pd

import seaborn as sns

sns.set(rc={'figure.figsize':(11.7,8.27)})

cv_results = pd.DataFrame(pipe.search.cv_results_["params"])

cv_results["model"] = cv_results["clf"].astype(str).str.split("(").str[0]

cv_results["score"] = pipe.search.cv_results_["mean_test_score"]

sns.lineplot(

x="clf__min_samples_split",

y="score",

hue="clf__max_depth",

marker="o",

data=cv_results.loc[

(cv_results["model"]=="RandomForestClassifier") &

(cv_results["clf__bootstrap"]==True)

]

)

<Axes: xlabel='clf__min_samples_split', ylabel='score'>